The Struggle for Accuracy

If there is one common question that product and engineering leaders ask us, it is: “How is Crux able to produce accurate answers even with multiple joins and a large dataset?”

You'd know what I'm talking about if you've said or heard any of this:

- “LLMs generate accurate queries with 2-3 joins but start hallucinating with larger data”

- “The context length exceeds 32K when I try to send the entire data schema to the LLM”

- “LLMs don't have a full understanding of the metrics used in my team and industry”

Within 60 days of launch, users have asked thousands of questions on Crux across domains such as pharmaceutical manufacturing, e-commerce advertising, logistics and supply chain, sales intelligence, and more.

We have answered them with an accuracy of more than 98%.

Want to know how? We have decided to spill the beans.

Are LLMs to blame?

With these issues coming up, it is tempting to dismiss current LLMs as still being in their 'nascent stages' and 'not ready for production use cases'.

Should we wait till context lengths go up to 100K?

Should we wait for the release of industry-specific LLMs?

Turns out, the answer is no.

Why do LLMs behave this way? We found 3 fundamental problems:

- Users ask vague questions that don’t reveal their true intent

- LLMs don’t understand industry-specific and company-specific context

- LLMs have a limited context length which restricts the size of datasets you can use

We solved each of these problems separately by creating dedicated agents. In this article, we dive deeper into the first, and perhaps the most crucial, problem.

Users Ask Vague Questions

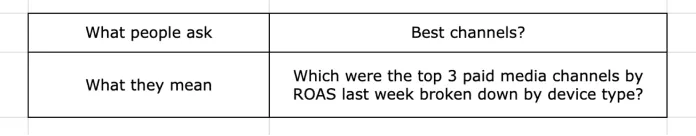

'Asking the right question' is by far the most difficult part of any decision, task, or action. When we looked at the questions being asked on Crux, these were the top examples that came up:

- Marketing SaaS: "Which channels are working best? How can I improve my revenue?"

- Logistics SaaS: "Which of my fleets are under-utilized? Should I get rid of them?"

- Manufacturing SaaS: "Can I manufacture 1 million tablets of X this month? Why not?"

These seem like vague questions, with no definitions for "best", "improve", "under-utilized" and many other words. But more often than not, analytics begins with vague questions.

Users asked whatever was on their mind, and being very specific in their ask felt counter-intuitive to them.

When they are not given specific information, LLMs assume the rest and come up with answers that don't match the user's expectations. And then we claim that "LLMs hallucinate".

It became clear that the first step toward accuracy was to nail down the user's intent. Generating an accurate SQL query for a question that did not capture what the user really meant was a futile effort.

Making LLMs think like a Human

Through the years, whenever humankind has been stuck on a problem, we have turned to nature to find a way, just as the Wright Brothers took inspiration from birds.

When it comes to AI, we take inspiration from the human mind. The most advanced LLMs are built on neural networks which aspire to resemble the neurons in our brains.

So we did the same. We tried to get LLMs to behave in a way that was similar to humans.

What does a smart data analyst do when you ask them to fetch some data, uncover an insight, or create a projection? They ask you questions. They keep asking until they know every piece of information they need, so that when they get you the data, it's perfect.

In fact, what separates people who are great at analytics from the rest is the unwavering itch to keep asking questions. They would rather annoy you at the very start than get you the wrong data.

We're proud to introduce the industry's first 'Clarification Agent'

Crux's Clarification Agent behaves like a smart data analyst who has the complete business context from every team (product, customer success, sales) and knows how and where the data is stored, drawing on documentation and data engineers.

Clarification Agent in Action

Let's look at how the Clarification Agent works in action:

Let's say you are part of a SaaS team that optimizes advertising campaigns for brands. Your customer is a CMO who wants to analyze buying patterns and decide which products to double down on for the upcoming holiday season.

Step 1: First Clarification

Here is how Crux responds:

- Crux asks a clarifying question to understand what the user means by “best”.

- We make it easier to respond by providing the options that are most likely to be selected.

- These options are selected by looking at past questions and the values present in the database, so that the user’s intent is fully captured. Crux also remembers these definitions for future conversations.

Let’s say they pick: “Users who have spent the most money”

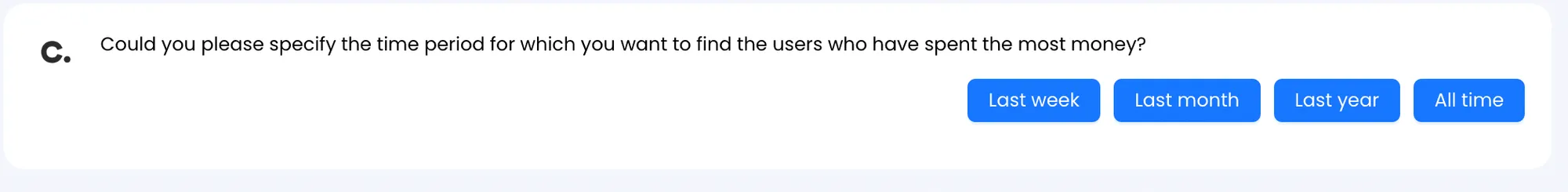
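
As a rough illustration of how options like these could be ranked, here is a minimal Python sketch. It is not Crux's implementation: the function `suggest_options`, the sample history, and the candidate definitions are all hypothetical, and it assumes that past selections are stored as (term, chosen definition) pairs.

```python
from collections import Counter


def suggest_options(term, past_choices, candidates, remembered, top_k=3):
    """Return clarification options for a vague term.

    Hypothetical helper: past_choices is a list of (term, chosen_definition)
    pairs, and remembered maps a term to the definition the user picked earlier.
    """
    # If the user already defined this term, reuse that definition.
    if term in remembered:
        return [remembered[term]]
    # Otherwise rank candidates by how often they were chosen in the past.
    counts = Counter(choice for t, choice in past_choices if t == term)
    ranked = sorted(candidates, key=lambda c: -counts[c])  # stable for ties
    return ranked[:top_k]


# Made-up history and candidates, for illustration only.
past = [
    ("best", "Users who have spent the most money"),
    ("best", "Users who have spent the most money"),
    ("best", "Users who ordered most often"),
]
candidates = [
    "Users who ordered most often",
    "Users who have spent the most money",
    "Users with the highest average order value",
]
remembered = {}

options = suggest_options("best", past, candidates, remembered)
remembered["best"] = options[0]  # the user picks the first option
repeat = suggest_options("best", past, candidates, remembered)
```

Once a definition is remembered, the second call returns it directly instead of asking again, which mirrors the "remembers these definitions" behavior described above.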

Step 2: Second Clarification

Crux stores your previous response and asks another question:

Since “spending the most money” depends on the time period considered, Crux asks whether the user has a specific time period in mind.

Let’s say they pick: “Overall”

If the user's preferred option is not among those offered by the Clarification Agent, they can type it in instead.

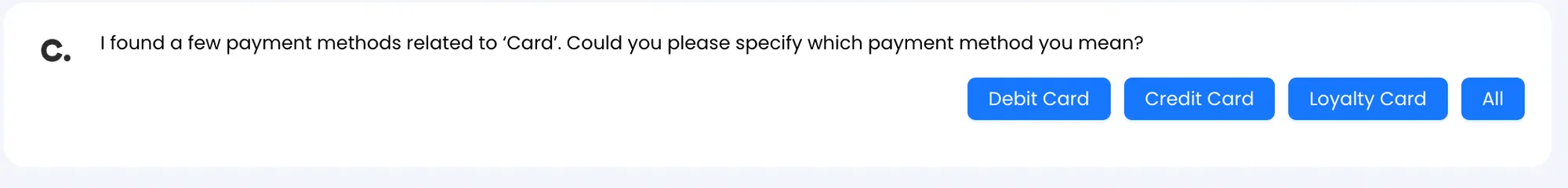

Step 3: Checking Validity of the Question

The user may have mentioned ‘Card’ as the payment method, which matches multiple options in the database. In that case, Crux clears up the confusion by asking specifically which option the user means.

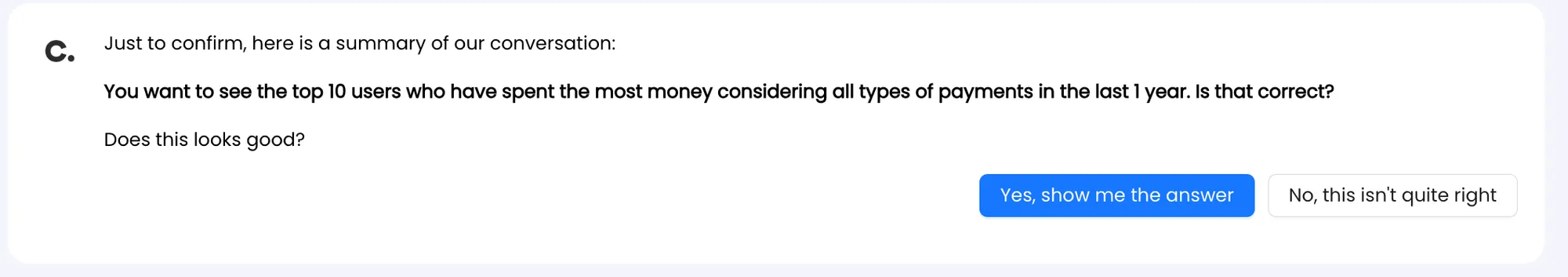
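
The validity check above can be sketched as a lookup of the user's term against the values stored in a column. This is a simplified illustration under stated assumptions: the `resolve` function, the case-insensitive substring matching, and the payment method values are hypothetical and not taken from Crux.

```python
def match_values(user_term, column_values):
    """Return stored values that contain the user's term (case-insensitive)."""
    needle = user_term.strip().lower()
    return [value for value in column_values if needle in value.lower()]


def resolve(user_term, column_values):
    """Classify a term as resolved, ambiguous, or not found in the column."""
    matches = match_values(user_term, column_values)
    if len(matches) == 1:
        return {"status": "resolved", "value": matches[0]}
    if not matches:
        return {"status": "not_found", "options": []}
    # More than one stored value matches: ask the user which one they mean.
    return {"status": "ambiguous", "options": matches}


# Hypothetical values of a payment method column.
payment_methods = ["Credit Card", "Debit Card", "UPI", "Cash on Delivery"]

card = resolve("Card", payment_methods)
upi = resolve("upi", payment_methods)
crypto = resolve("Crypto", payment_methods)
```

Here "Card" comes back as ambiguous with two options to offer the user, while a term with no match is flagged immediately instead of being passed on to query generation.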

Step 4: Final Confirmation

Crux keeps asking clarifying questions until it has grasped the full intent behind the user's question. Once it has, it displays its final understanding of the question.

Once the user confirms that this is exactly what they had in mind, the Clarification Agent hands over to the Classifier Agent, which breaks the question down into specific commands and identifies the exact dataset that needs to be queried.
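
Taken together, the four steps amount to a loop that keeps asking until nothing is left unspecified. The sketch below is one way to picture that loop, not a description of Crux's internals: the `Intent` class, the `REQUIRED_SLOTS` list, the example question, and the scripted answers are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical list of details that must be known before querying.
REQUIRED_SLOTS = ("metric", "time_period")


@dataclass
class Intent:
    question: str
    slots: dict = field(default_factory=dict)


def next_missing(intent):
    """Return the first required slot that is still unanswered, or None."""
    for slot in REQUIRED_SLOTS:
        if slot not in intent.slots:
            return slot
    return None


def clarify(intent, ask):
    """Call ask(slot) for each missing slot until every one is filled."""
    while (slot := next_missing(intent)) is not None:
        intent.slots[slot] = ask(slot)
    return intent


def summarize(intent):
    """Build the final understanding shown to the user for confirmation."""
    details = ", ".join(f"{k}={v}" for k, v in intent.slots.items())
    return f"{intent.question} [{details}]"


# Scripted answers stand in for a real user in this example.
answers = {
    "metric": "Users who have spent the most money",
    "time_period": "Overall",
}
intent = clarify(Intent("Who are my best users?"), answers.__getitem__)
summary = summarize(intent)
```

Only after the summary is confirmed would the question move on to the next agent.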

More on that in the next article.

Other Benefits of Clarification Agent

We introduced the Clarification Agent primarily to improve accuracy, but it brought more benefits than we expected:

Users now have a lot more ideas about what they could be asking

Users generally have some understanding of how they want to segment or break down the data, but the suggestions provided by the Clarification Agent open up their imagination about what they could be asking, potentially leading to better insights.

Admins can control the extent of data access each user has

Admins and managers can control the access level to each dataset at an org level, function level, or user level. This ensures that when a user with restricted access asks a question outside their purview, they are denied access to the response until the admin approves.

Users can get to their insight much faster

Without the clarification layer, users would have to wait while Crux made assumptions, chose tables, generated SQL, and fetched the data before they knew whether Crux had understood the question correctly.

Now, with the Clarification Agent, if they ask for something that doesn't exist, they know instantly and can modify their ask without waiting for a whole cycle.
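
The access control benefit described above (org-level, function-level, and user-level rules) can be illustrated with a small lookup. The source does not say how the three levels interact, so this sketch assumes that the most specific rule wins and that access is denied by default; `can_access`, the rule format, and all names in the example are hypothetical.

```python
def can_access(user, dataset, rules):
    """Check dataset access, most specific scope first. Default is deny.

    Assumption: rules maps (scope, key, dataset) to True or False.
    """
    scopes = (
        ("user", user["id"]),
        ("function", user["function"]),
        ("org", user["org"]),
    )
    for scope, key in scopes:
        decision = rules.get((scope, key, dataset))
        if decision is not None:
            return decision
    return False


# Made-up rules: the org can see orders, marketing cannot see payments.
rules = {
    ("org", "acme", "orders"): True,
    ("function", "marketing", "payments"): False,
}
analyst = {"id": "u2", "function": "marketing", "org": "acme"}

before = can_access(analyst, "payments", rules)
# An admin approval is modeled here as adding a user-level rule.
rules[("user", "u2", "payments")] = True
after = can_access(analyst, "payments", rules)
```

Because the check runs before any query is generated, a restricted user can be told right away that approval is needed.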

How we Measured the Accuracy

Selection of Dataset:

We picked 3 databases from our partners, each belonging to a different industry.

1) Advertising:

33 tables containing data about user details, product catalog, inventory status, order and payment details, campaign performance metrics, etc.

2) Pharmaceutical Manufacturing:

55 tables containing data about facilities, maintenance schedule, equipment performance, raw material inventory, production plan, vendor & supply information, employee efficiency & skill level, etc.

3) Logistics & Supply Chain:

46 tables containing data about account details, delivery schedules, real-time order tracking information, delivery partner performance, etc.

Questions Asked:

Over the course of 1 week, multiple users from these partner organizations asked 1,223 questions on Crux, which were logged and assessed by our team. Examples of the most complex questions include:

1) Advertising:

Is there an improvement in the efficiency of the low-performing keywords for Account 1 on Amazon over the last quarter?

2) Pharmaceutical Manufacturing:

Can we manufacture 1,506,000 tablets of ‘Tablet X’ at ‘Facility Y’? By when can we complete production?

3) Logistics & Supply Chain:

Why is there an increase in delivery time in the West region?

Assessment of Responses:

We changed the configuration of the models in phases so that the questions were divided roughly equally into 3 buckets:

- Answered only using the base LLM - 411 questions

- Answered through Crux (without using the clarification agent) - 403 questions

- Answered through Crux (including the clarification agent) - 409 questions

For each question, we determined whether the response was accurate and in line with the user's expectations. We did this using 2 mechanisms:

- Like/Dislike option - to consider the user’s opinion on the correctness of the response

- Manual Assessment - our team checked each query manually and put it in one of two buckets

We replicated this exercise for each of the 3 base LLMs on Crux’s backend infrastructure.
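
For readers who want to run a similar assessment, here is a minimal sketch of how per-bucket accuracy could be computed from logged records. It makes assumptions the article does not state: each record carries a `liked` flag and a `manual_ok` flag, and a response counts as accurate only when both agree. The three sample rows are made-up toy data, not results from this study.

```python
from collections import defaultdict

# Bucket sizes reported above; they should add up to the total question count.
BUCKET_SIZES = {
    "base_llm": 411,
    "crux_without_clarification": 403,
    "crux_with_clarification": 409,
}
total_questions = sum(BUCKET_SIZES.values())


def accuracy_by_bucket(records):
    """Return the share of accurate responses per bucket.

    Assumption: a response is accurate only if the user liked it
    and the manual review also marked it as correct.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for record in records:
        totals[record["bucket"]] += 1
        if record["liked"] and record["manual_ok"]:
            correct[record["bucket"]] += 1
    return {bucket: correct[bucket] / totals[bucket] for bucket in totals}


# Toy rows for illustration only; these are not the study's data.
toy_records = [
    {"bucket": "base_llm", "liked": True, "manual_ok": False},
    {"bucket": "base_llm", "liked": True, "manual_ok": True},
    {"bucket": "crux_with_clarification", "liked": True, "manual_ok": True},
]
toy_accuracy = accuracy_by_bucket(toy_records)
```

Requiring both signals is the stricter choice; counting either signal alone would be an equally valid design, and the article does not specify which was used.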
